The grand finale to the 2022-23 College Basketball Season is here. From this point forward, there's nothing left to do but do it.

Over-seed / Under-seed -- Predictive

Let's start with this model. All of the previous years' models can be found on the Bracket Modeling tab above, as well as the explanation for the O/U/A notations.

This model is a relative comparison exercise to get a feel for the passivity or resistance of a region. Even though comparative octets are given, it does not mean the 2023 octet should match its equivalents in a pick-for-pick manner. There are slight differences between the matching octets, and other models may suggest different projections.

- ALA octet: A lot of similarities to 2014 ARI, 2021 BAY, 2021 MICH, and 2022 GONZ. In all four examples, the 4-seed is stronger than 2023 UVA, so that means less resistance this year.

- ARI octet: Compares to 2014 KU, 2017 DUKE, and 2019 UK. Two of these matches had key injuries (Embiid for 2014 KU and Washington for 2019 UK), whereas ARI does not.

- PUR octet: This is the only region for which I could not find historical equivalents. The closest was 2014 WICH, which was a stretch, and 2014 WICH matches another 2023 1-seed octet much more closely. Last year, there were no historical equivalents for 2022 BAY or 2022 UK... and history was made.

- MARQ octet: The best example is 2021 ALA, but I'm always skeptical of 2021 metrics due to the plethora of incomplete games/schedules. Other examples include 2017 ARI and 2016 XAV, but the lower seeds of 2023 are somewhat stronger than these two matches.

- HOU octet: 2016 KU or 2017 GONZ with a weaker 4-pod are the best comparisons. In every year except 2018 (UVA) and 2022, the top-rated 1-seed advanced to the E8. HOU fits only one of the upset criteria from 2018 UVA, which is the Sasser injury, but since it looked more like a strain than a tear, his highly likely return should uncheck that box and, with it, any chance of a 16-seed over a 1-seed.

- TEX octet: The octets that best match are 2019 MICH, 2018 PUR, 2017 LOU, and 2014 NOVA. It was very difficult to find historical 2-seed octets where the teams are accurately seeded according to quality metrics like this TEX octet.

- KU octet: 2022 BAY, 2017 NOVA, 2014 WICH, and 2018 XAV if it had slightly stronger lower seeds. None of these comparisons bode well for KU's chances, and I posted a micro-analysis on this year's KU team, which explains why I think they are in trouble.

- UCLA octet: 2015 ARI and 2017 UK fit decently with this octet. The biggest difference is both of these matches ran into accurately seeded 1-seeds, which UCLA does not have to worry about.

National Champion Profile

Let's start with the initial rules: 1) Must be an 8-seed or better, 2) Must not lose their first game in the conference tournament (failures are red-texted), and 3) Must not have double-digit losses (failures have strike-through).

- 1-seeds: ALA, HOU, KU, PUR

- 2-seeds: ARI, TEX, UCLA, MARQ

- 3-seeds: BAY, XAV, GONZ, KNST

- 4-seeds: UVA, IND, CONN, TENN

- 5-seeds: SDST, MIA, STMY, DUKE

- 6-seeds: CREI, IAST, TCU, UK

- 7-seeds: MIZZ, TXAM, NW, MIST

- 8-seeds: UMD, IOWA, ARK, MEM
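The three initial rules are simple filters, so here's a minimal Python sketch of how they might be applied. The `Team` fields and the example teams are hypothetical placeholders (not real 2023 records), and the post doesn't say how edge cases or missing data are handled.

```python
from dataclasses import dataclass


@dataclass
class Team:
    name: str
    seed: int
    lost_first_conf_tourney_game: bool  # lost its opener in the conference tournament
    losses: int                          # losses entering the NCAA tournament


def failed_rules(team: Team) -> list:
    """Return the names of the initial profile rules this team fails."""
    failures = []
    if team.seed > 8:                      # rule 1: must be an 8-seed or better
        failures.append("seed")
    if team.lost_first_conf_tourney_game:  # rule 2: no first-game conf-tourney loss
        failures.append("conf_tourney")
    if team.losses >= 10:                  # rule 3: no double-digit losses
        failures.append("losses")
    return failures


def contenders(teams: list) -> list:
    """Names of teams that pass all three initial rules."""
    return [t.name for t in teams if not failed_rules(t)]


# Hypothetical teams, for illustration only.
field = [
    Team("Team A", 1, False, 4),
    Team("Team B", 3, True, 8),
    Team("Team C", 6, False, 12),
    Team("Team D", 9, False, 7),
]
print(contenders(field))  # ['Team A']
```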

I have my reservations about keeping non-power conference teams (HOU, GONZ, STMY, SDST, and MEM) in this list, but since there are no restrictions involving conference affiliation (at the time), I have to include these teams in the model. Here are the 17 contenders in the National Champion Profile:

This table should be very familiar from the many articles I've written about the Championship Profile model. The only new column is 'Transfers,' located to the right of DQs. If you count horizontally for each team, you'll discover that transfers are not included in the DQ counts. Since I just added this metric to the CPM this year and I'm nervous it could break in its first year (especially considering how prevalent the transfer portal is in CBB), I have left it to the side. From this table and the disqualifier counts, here are the champion tiers:

- HOU and UCLA. If UCLA hadn't lost Jaylen Clark to injury, they would have had zero DQs because they would qualify as a 5O team. In fact, if UCLA were to win (with or without Clark), I would probably have to re-do the Post Production component again because several teams could be reclassified into a 6th archetype (2018 NOVA would be one team that could move in with UCLA). Since I'm really doubtful about post-Clark UCLA, I have to say the front-runner is HOU, with their only DQ being lack of competition. Yes, they have played ALA, UVA, STMY and MEM three times, but they weren't tested as much as 2014 CONN, who is the only non-P6 conference team to win a title. You may also notice an asterisk in their post-production qualifier. They actually have the taxonomy of 2012 UK, the ShDs and RbDs of 2007 FLA, and the post production of 2008 KU (all of which were 2W archetypes). As I teased earlier, I do have a metric available for conference affiliation of national champions, but at the moment, it is not an official component of the model (and if it were, HOU would have another DQ). Finally, HOU and UCLA had 13- and 12-game winning streaks snapped with losses in their conference tournament finals. No eventual national champ entered the tourney with a winning streak that long (those streaks would be 14- and 13-game streaks had they won). 2013 LOU entered on a 10-game streak and 2008 KU entered on a 7-game streak. All others entered on 5-game streaks or smaller, with most title runs starting on a 0-game win streak (which both currently have). Good thing they both lost!

- ALA, KU, ARI, MARQ, UVA and MIA. Instantly, I would ignore UVA and MIA because they are lower in the efficiency ranks than previous champions (not a component in the model). I'm also worried about the injury status of MIA post-player Omier. KU has a tough path through the bracket, according to the OS/US model above. MARQ also has a difficult path, but an easier one than KU's. ARI has a 2.5 because I only gave half of a DQ for being a 1-seed falling to a 2-seed. Only two NCs have fallen in seed-line from the previous year (1998 UK and 2016 NOVA), and both fell from a 1-seed to a 2-seed like ARI. ALA checks off all the boxes except PG production. Only three NCs had fewer points than ALA and none had fewer than 3.5 AST, but I like them as a next-best option to HOU because they are one of only three teams to qualify under post-production rules (HOU being another). To be a champion, I think you have to be built like one.

- PUR, GONZ, CONN, and STMY. Instantly, I would write off GONZ and STMY as potential champs because of conference affiliation. If GONZ didn't win it in 2021 when they had every advantage in their favor, they may never win one. I don't like PUR's youth (and lack of athleticism) on the perimeter. CONN was playing like a national champion at the beginning of the season before their flaws caught up to them. I feel like they've learned from those flaws, but the time spent in reverse may have cost them a title. I'm not sure about the fifth-year rules for next season, but I think CONN can bring everyone back (assuming Jordan Hawkins doesn't go pro) except one bench player. If they make it to at least the E8 this year, then it removes a DQ for next year's team. The only things remaining would be a conference title and team composition.

Over-seed / Under-seed -- Poll-based

Now, let's look at another OS/US model, but I warn you, this one is a little riskier than the other two. I've not been able to put my finger on exactly what this model is evaluating, but when nothing else works or something is needed to break a tie between two teams, the Poll-based OS/US model serves that purpose. Last year, it was a perfect 4-0 in R64 match-ups, and as long as it doesn't produce a lop-sided count of OS/US identities, it is fairly reliable. Let's see the model.

First things first, the model produced 18 OS identities and 10 US identities, which is an acceptable balance. The thing that catches my eye is that there are only two OS/US match-ups. I'm always hoping for a lot of OS/US match-ups so that I don't have to work as hard figuring out the 50-50 match-ups. Anyways, the model favors CHRL over SDST and AUB over IOWA. Basketball-wise, you need to shoot well to beat the stingy defense of SDST, and CHRL does shoot well (very few teams do this year). IOWA also shoots well, but AUB has good length and athleticism, which you need to contest shooters. Both match-ups have a margin differential of 7 (4 -- -3 and 5 -- -2), so I'll probably go with both of these predictions in my personal bracket. There are four match-ups where the teams are both OS or both US. This is what I've defined as a critical match-up, where one team has to win and one team has to lose, even though their US (OS) identities say they should both win (lose). There's nothing predictive about them, other than guaranteeing that the team waiting in the R32 will face either an over-seeded opponent (good news for PUR and GONZ) or an under-seeded opponent (bad news for KU and KNST). For the record, 2022 BAY, who was accurately seeded according to this model, was paired against two OS teams (UNC and MARQ) and lost their R32 match-up, so maybe it's not as good as assumed for PUR and GONZ.
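The pairing logic described above can be sketched as a small classifier. This is a hypothetical illustration: the identity labels ('OS', 'US', and 'A' for accurately seeded), the rule that an OS-vs-US pairing leans toward the US team, and treating the differential as the absolute gap between the two listed values are my reading of the paragraph, not the model's actual implementation. Which value in each pair belongs to which team is also assumed.

```python
def classify_matchup(team_a, id_a, team_b, id_b):
    """Return ('pick', team), ('critical', None) or ('no signal', None).

    Identities: 'OS' (over-seeded), 'US' (under-seeded), 'A' (accurate).
    """
    if {id_a, id_b} == {"OS", "US"}:
        # OS vs US: lean toward the under-seeded team.
        return ("pick", team_a if id_a == "US" else team_b)
    if id_a == id_b and id_a in ("OS", "US"):
        # Both OS or both US: one identity must be wrong (critical match-up).
        return ("critical", None)
    return ("no signal", None)


def margin_differential(value_a, value_b):
    """Gap between the two teams' model values, e.g. 4 and -3 -> 7."""
    return abs(value_a - value_b)


print(classify_matchup("CHRL", "US", "SDST", "OS"))      # ('pick', 'CHRL')
print(classify_matchup("Team X", "OS", "Team Y", "OS"))  # ('critical', None)
print(margin_differential(4, -3), margin_differential(5, -2))  # 7 7
```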

Over-seed / Under-seed -- Conference-based

Next up, let's look at the conference-based OS/US model. Although I haven't had an opportunity to investigate the effect of unbalanced conference schedules on this model, it is a fairly reliable model when the number of OS/US predictions is under twenty. From the current count, it has eighteen predictions with a potential of two more depending on the outcomes of the Play-in Games (PIGs). Last year, the model was 14-3 with one no-decision due to a critical match-up (US USC vs US MIA) and all three misses came from the B10 conference.

The most important thing to remember about this model is predictions are based on achieving or failing to achieve seed expectations. For example, if a 1-seed is projected as a potential OS, then failing to achieve the F4 is a correctly predicted OS. Likewise, if a 1-seed is projected as a potential US, then reaching or exceeding the F4 is a correctly predicted US. The column headed E(W) is that seed's win-expectation. 1-seeds are expected to win at least four games, 2-seeds are three wins, 3- and 4-seeds are two wins, 5- and 6-seeds are one win, and 7- thru 10-seeds are 0.5 wins (the coin-flip seeds). You must also pay close attention to the wording of the notes. Most are worded as "OS Team A or US Team B". If either accomplishes the prediction, it doesn't matter what the other does. If Team A fails to achieve seed-expectations, then Team B can fail too. Or, if Team B achieves seed-expectations, then Team A can also succeed. Thankfully, there are no group-based OS/US scenarios like last year. Here's what I see:

- If MIA wins one game, it doesn't matter what DUKE or UVA does. If DUKE fails to reach the R32 and UVA fails to reach the S16, then it doesn't matter what MIA does.

- If PITT fails to win its play-in game, then DUKE and NCST both become OS and both must lose. If PITT wins the play-in game and its R64 game, then it doesn't matter what DUKE and NCST do.

- If CREI wins one game, it doesn't matter what CONN does. If CONN doesn't win two games, then it doesn't matter what CREI does.

- If NW wins one game, then it doesn't matter what IND does. If IND doesn't win two games, then it doesn't matter what NW does.

- If ILL wins one game, then it doesn't matter what UMD and IOWA do. If UMD and IOWA both lose, then it doesn't matter what ILL does.

- Since WVU and UMD play against each other and both are OS, it is easier for the model to be correct if UMD wins (which forces ILL to win) than if WVU wins. (This scenario leaves a high probability for a mis-pick.)

- If ARI fails to reach the E8, then it doesn't matter what happens to UCLA and USC. If UCLA reaches the E8 AND USC wins one game, then it doesn't matter what happens to ARI.

- If TXAM wins one game, it doesn't matter what happens to UK. If UK loses, then it doesn't matter what happens to TXAM, but TENN also can't reach the S16. If TENN reaches the S16, then UK and MIZZ must both win a game. (Another high-probability scenario for a mis-pick.)

- ARK is identified as an OS and ILL looks like a potential US. This is what I would call a domino game for this model. If ARK wins, then ILL can't be US, so IOWA and UMD must both lose to confirm their OS identities, which means WVU wins and can't be OS. (A third scenario with a high probability for a mis-pick.)

- Considering that eighteen predictions exist with a potential two more dependent on the PIGs, this would give a total of twenty projections, which is our fail-safe threshold for model reliability. Logically speaking, the more predictions a model makes, the more potential there is for conflicting predictions, for which we already have three examples. I would not predict my entire bracket with this model, but some of the predictions (the domino-less ones) seem safer.
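Grading a conference-based prediction comes down to comparing a team's wins against its seed's E(W). Below is a minimal sketch, assuming an OS call is correct when a team finishes below its E(W) and a US call is correct when it reaches or exceeds it, as described at the start of this section. Seeds 11 and below aren't covered by the E(W) list in the post, so the sketch returns None for them; the function names and the sample results are hypothetical.

```python
from typing import Optional

# Win expectations per seed, as listed in the post (11+ not listed there).
EXPECTED_WINS = {1: 4, 2: 3, 3: 2, 4: 2, 5: 1, 6: 1,
                 7: 0.5, 8: 0.5, 9: 0.5, 10: 0.5}


def prediction_correct(identity: str, seed: int, wins: int) -> Optional[bool]:
    """Grade one OS/US call against the seed's E(W); None if seed not covered."""
    ew = EXPECTED_WINS.get(seed)
    if ew is None:
        return None
    if identity == "OS":
        return wins < ew    # fell short of seed expectation
    if identity == "US":
        return wins >= ew   # met or beat seed expectation
    raise ValueError(f"unknown identity: {identity!r}")


def either_or(first: Optional[bool], second: Optional[bool]) -> bool:
    """An 'OS Team A or US Team B' note is satisfied if either call is correct."""
    return bool(first) or bool(second)


# Hypothetical results: a 4-seed called OS wins 2 games (call wrong),
# and a 5-seed called US wins 1 game (call right), so the note holds.
note = either_or(prediction_correct("OS", 4, 2), prediction_correct("US", 5, 1))
print(note)  # True
```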

Quality Curve & Seed Curve Analysis

There's a lot to look at here, so I'm going to keep the intros and the filler to a minimum. First, let's look at the QC compared to its minimum and maximum values over the games since last QC analysis.

This is honestly the first time I have seen anything like this QC. Compared to its max/min values, the current QC looks like a roller coaster, repeatedly climbing up to the max and then falling down to the min. For documentation purposes, here are some important details:

- The max of the Final QC is higher than the max of the Feb QC at #8-10, #14, #18-19, #22-24, #27, #34, #36-40, and #42-44.

- The min of the Final QC is lower than the min of the Feb QC at #1-7, #17-19, #22-25, #29, #36-40, and #49-50.

- The overlaps of these widening regions are #18-24 and #36-40. In both of these ranges, teams are playing closer to the minimum curve, with the exception of teams #22-24, who are playing at the midpoint of the max and min curves. For the record, these teams are UTST, MEM, ARK, DUKE, UMD, IAST, and KNST for the #18-24 group and USC, IOWA, (OKST), PNST, and MIA-FL for the #36-40 group.
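Range lists like the ones above ("#8-10, #14, #18-19") can be produced mechanically from two curves. Here's a minimal sketch, assuming each curve is stored as a list of quality values indexed by rank (position #1 first); the curve values and position sets in the example are made up.

```python
def where_above(curve_a, curve_b):
    """1-based rank positions where curve_a is strictly above curve_b."""
    return [i + 1 for i, (a, b) in enumerate(zip(curve_a, curve_b)) if a > b]


def to_ranges(positions):
    """Collapse sorted positions into the '#8-10, #14' style used above."""
    spans = []
    for p in positions:
        if spans and p == spans[-1][1] + 1:
            spans[-1][1] = p        # extend the current run
        else:
            spans.append([p, p])    # start a new run
    return ", ".join(f"#{a}" if a == b else f"#{a}-{b}" for a, b in spans)


# Made-up values for a 6-position curve.
final_max = [30.1, 29.0, 28.7, 27.9, 27.5, 26.0]
feb_max = [29.8, 29.2, 28.5, 27.0, 27.8, 25.9]
print(to_ranges(where_above(final_max, feb_max)))  # #1, #3-4, #6

# Overlap of two widening regions (hypothetical position sets).
widening = sorted(set([8, 9, 10, 14]) & set([9, 10, 11, 14, 15]))
print(to_ranges(widening))  # #9-10, #14
```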

I don't want to read into this situation because 1) I've never seen it before and have nothing upon which to base a hypothesis, and 2) There are a variety of interpretations. For example, these could be teams that are struggling (which is why they are playing at the minimum curve) and still headed lower, or these could be teams on the rise and filling in the gaps left by teams falling in the quality rankings, or these could be teams that have hit bottom and the tournament is a fresh start to turn upwards. I think a deeper dig into the eleven tourney teams could be a waste of time and probably an over-analysis of the QC and its function. Let's look at how the final QC compares to both the Jan and Feb QCs, both of which had their own issues.

Let's try to break down this eye-sore.

- At #2, #7-12, and #46-48, the Final QC is above the Feb QC. Everywhere else, it is below.

- At #9-10, #27-34, and #45-48, the Final QC is above the Jan QC. Everywhere else, it is below.

- Overall, the quality of teams is lower today than at either time of QC analysis.

For historical perspective, let's see how the 2023 QC stacks up against the last five tourneys.

As the previous two QC analyses have pointed out, 2023 matches 2018 the most, and 2022 is the next closest. Both of those years had a M-o-M rating over 20% as well as 13+ upsets (I define an upset as a seed difference of four or more). Here are the comparative details:

- 2023 is better than 2018 at #9 and #22-50, similar at #5 and #11-14, and lower at #1-4, #6-8, #10, and #15-21. In simple terms, it is flatter than 2018, which implies more chaos than sanity. I've also realized that flatter curves produce more upsets in later rounds (2nd weekend upsets) than in early rounds (1st weekend upsets). For example, the round-by-round upset count of 2018 was 5-5-3-0-0-0. It was only the fourth time since 1985 that there were three upsets in the S16 (the others being 1990, 2000 and 2002). 2022 also had three upsets in the S16.

- 2023 is better than 2022 at #2-3, #5 and #16-50, similar at #4 and #15, and lower at #1 and #6-14. If the same logic holds, 2023 is slightly steeper than 2022, so it should be a little more sane than 2022 and could be implying fewer upsets in the early rounds. The round-by-round upset count in 2022 was 6-5-3-0-1-0, so based on both curves, 2023 could produce something like 5-4-3-1-0-0.
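Using the post's definition of an upset (the winning seed is at least four lines worse than the losing seed), a round-by-round count like 5-5-3-0-0-0 can be tallied as below. The game tuples are hypothetical; the sketch only shows the counting.

```python
ROUNDS = ["R64", "R32", "S16", "E8", "F4", "NC"]


def is_upset(winner_seed, loser_seed, min_gap=4):
    """Upset = winner's seed is at least `min_gap` lines worse than the loser's."""
    return winner_seed - loser_seed >= min_gap


def upset_line(games):
    """games: iterable of (round, winner_seed, loser_seed) -> e.g. '5-5-3-0-0-0'."""
    counts = dict.fromkeys(ROUNDS, 0)
    for rnd, winner, loser in games:
        if is_upset(winner, loser):
            counts[rnd] += 1
    return "-".join(str(counts[r]) for r in ROUNDS)


# Hypothetical results, for illustration only.
games = [
    ("R64", 12, 5),   # 7-line gap: upset
    ("R64", 9, 8),    # 1-line gap: not an upset under this definition
    ("R32", 5, 1),    # 4-line gap: upset
    ("S16", 11, 2),   # 9-line gap: upset
    ("E8", 3, 1),     # 2-line gap: not an upset
]
print(upset_line(games))  # 1-1-1-0-0-0
```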

The only remaining piece of the puzzle: Did the Selection Committee properly appraise quality?

Here is the 2023 Seed Curve (SC). Yikes!

- Spikes at #2, #4, #6 and #8-10. In 2022, only the 5-seed, 8-seed, and 10-seed of the SC were above the QC, and all three met or exceeded group-based seed-expectations. 5-seed HOU knocked off 1-seed ARI, 8-seed UNC knocked off 1-seed BAY and ran to the title game, and 10-seed MIA ran to the E8 and was the only 10-seed to win. I'm not sure the same fate awaits this year's out-performers, since 4-seeds, 8-seeds and 9-seeds are all competing in the same path for wins. So, let's look more into that path.

- 1-seeds are facing better seeds disguised as 4-, 8- and 9-seeds. In 2018, the problem was weak 1-seeds, where two of the 1-seeds were 3- and 4-seeds in disguise (the first was upset by a 9-seed and the second made the F4). This problem of under-seeded competitors is more reminiscent of 2014. Two actual 4-seeds were 1-seed and 3-seed quality in disguise. Two actual 8-seeds were 5-seed and 6-seed quality in disguise, as were two 9-seeds. The 8-seed with 5-seed quality (UK) upset the lowest-ranked 1-seed (WICH), as well as the 4-seed with 1-seed quality in the next round. I hate to say it, but this exact scenario exists in the 2023 bracket: 1-seed KU is the lowest-rated 1-seed, with 8-seed ARK as a 5-seed in disguise along with 4-seed CONN as a 1-seed in disguise. Good job, Committee (sarcasm implied)!!!!!

- The seed-curve doesn't hold any regard for the 3-seed, 7-seed, 11-seed or 12-seed groups. Given the trouble that 2-seeds have had in the R64 for the past two years, maybe that curse is on the 3-seed group this year. With 10-seeds above expected quality and 7-seeds below expected quality, maybe the 1-3 record from 2022 flips around in 2023 for the 10-seeds. Likewise, stronger than expected 6-seeds against weaker than expected 11-seeds could flip around last year's 1-3 record.

- Yes, I'll say it: The Selection Committee dropped the ball this year, but they seem to do it every year, so 'they dropped it harder than usual' is probably more accurate. 2023 has weak 1-seeds (well, weakness up and down the curve), poor shooting metrics, and an inept Selection Committee -- and 2014 had these things too (along with the beginning of the horrendous Freedom of Movement philosophy). 2014's round-by-round upset count was 6-4-2-1-2-0 and its M-o-M rating was 21.35%, both in line with those of 2018 and 2022. Enjoy!!!!!

Return & Improve Model

The assumption behind this model is chemistry can contribute to a deep run in March. If a team returns a certain threshold for key metrics, then they should improve upon the previous season's tournament performance. Here are the historical probabilities for this model.

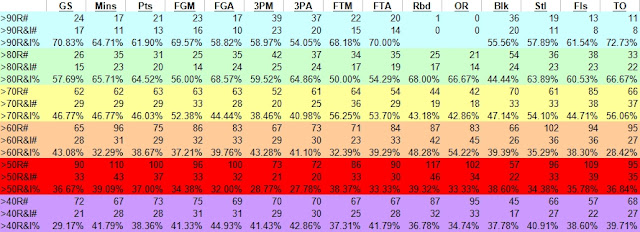

For the most part, points matter (along with the various metrics that go into points -- field goals, three-pointers and free-throws), with steals and minutes being the second-most important. In basketball, I would assume it is harder to generate offense than defense, so returning a high percentage of your team's offense from the previous season should be of the utmost value. Note that the probabilities get a little wonky at the 40% return level. This may be the result of other factors (like talent, from either one-and-doners or the transfer portal) driving the improvement instead of chemistry. The data goes back to 2003 for most teams, and as far back as 2001 for others, but maybe in the near future, I will re-examine the model to see the results with a recency bias. For notation, >90R# is the number of teams that return at least 90% of a specific stat category, >90R&I# is the number of teams that return at least 90% of a specific stat category AND improved on the previous year's performance, and 90R&I% is the latter divided by the former. Each successive row takes out the previous row's counts, so 80 only looks at returns in the range of 80.00-89.99%. Finally, the model does not include data from non-power conference teams, so GONZ and STMY do not have any data in the model, nor are they examined below.
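The row structure described above (each row excludes the rows before it, and R&I% = R&I# / R#) can be sketched as follows. I'm assuming 10-point rows all the way down to 0% and a simple (return %, improved?) record per team for one stat category; the sample records are invented.

```python
BUCKETS = [90, 80, 70, 60, 50, 40, 30, 20, 10, 0]  # lower bound of each row


def bucket(return_pct):
    """Row a return percentage falls into, e.g. 84.3 -> 80 (80.00-89.99%)."""
    for low in BUCKETS:
        if return_pct >= low:
            return low
    raise ValueError("return percentage cannot be negative")


def return_and_improve(records):
    """records: (return_pct, improved) per team for one stat category.

    Returns {row: (R#, R&I#, R&I%)}; R&I% is None for empty rows.
    """
    counts = {low: [0, 0] for low in BUCKETS}
    for pct, improved in records:
        row = counts[bucket(pct)]
        row[0] += 1              # returned at this level
        row[1] += int(improved)  # ...and improved on last year's tourney
    return {low: (n, k, k / n if n else None) for low, (n, k) in counts.items()}


# Invented sample of five teams' returning-points percentages.
sample = [(92.5, True), (95.0, False), (85.0, True), (81.0, True), (45.0, False)]
table = return_and_improve(sample)
print(table[90], table[80], table[40])  # (2, 1, 0.5) (2, 2, 1.0) (1, 0, 0.0)
```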

For documentation purposes, the only uncertainty in the 2023 data is the status of UK's Sahvir Wheeler. At the present moment, he is counted as a returner for UK, as the latest reports suggest he could play in the tournament. If it turns out afterwards that he is not a returner (either because this information was wrong or because UK doesn't go deep enough to give Wheeler a chance to return -- similar to 2011 DUKE's Kyrie Irving), then UK's percentages will fall by approximately 12% in every stat except BLKs (no shocker there). Let's see the return and improve candidates for the 2023 season.

The data is sorted in descending order, with the emphasis on the PTS category. I did this manually because I didn't want Excel auto-sort to screw up my hard work. Here's what I see:

- TCU is the only team to return 80% of their points and steals, and most other categories. This model suggests they have a 60% chance to advance to the S16, an improvement over the R32 run in 2022. The only concern I have with TCU is their battle with the injury bug all season. They've played several different lineups throughout the season, and just recently, they had a player leave the team for mental health reasons (or else they would be close to 90% return).

- The next team is IND who returns 60%+ of their points and minutes and 58% of their steals. The model suggests only a 38% chance to return and improve, but since their threshold of improvement is simply winning one game, it seems doable.

- The next group is AUB, IOWA, CREI and TEX, who return 50% of their points. The model suggests a 37% chance of improvement. CREI and TEX both need to win two games to achieve improvement. AUB and IOWA are tourney opponents, which means one has to lose (aka a critical match-up). AUB must win two whereas IOWA only needs one win, but if AUB defeats IOWA and then loses to HOU, then both fail to improve. I also want to point out that both AUB and IOWA fell in seed line (which is usually a bad omen in this model) whereas TEX and CREI both improved upon their seed line.

- The final group is TENN, MIST, USC, UK, BAY, MIA, ARI and MARQ, who return 40% of their points. The model concludes that this results in a 38% chance of improvement. Since we have eight teams, this means three of these teams -- on average -- will improve upon their 2022 tourney performance. MIST and USC play in a critical game. Of these eight teams, only MARQ and MIA improved their seed line. MARQ only needs one win to improve (this is probably one of the expected three) whereas MIA needs four wins (this is probably one of the five failures). UK plays in a critical match-up against PROV, who only returned 25% from last year. UK only needs one win to improve whereas PROV needs three.

- The one that concerns me the most is obviously HOU. They only return 30% of their previous season's production, which is a combination of four players graduating and two starters coming back from injury-shortened 2022 seasons.

- Three of the four 1-seeds are in this range as well, with KU barely returning 25% of last year's championship production. Only one defending champion has advanced past the S16 since 2001: 2007 FLA, which returned 90%+ of their 2006 championship team and repeated. This is another strike against KU.
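The "three of eight" estimate in the final group is simply the expected count, treating the 38% as the same per-team probability for every team in the group (the same arithmetic puts the 37% group of four at roughly 1.5 improvers):

```latex
E[\text{improvers}] = n \cdot p = 8 \times 0.38 = 3.04 \approx 3
\qquad
E[\text{improvers}] = 4 \times 0.37 = 1.48
```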

Meta Analysis

What qualities (four factors) do tournament teams possess, and which teams possess the qualities to beat them? In theory, if your opponent is bringing rocks, you want to bring paper, and so forth for paper/scissors and scissors/rock. First, I want to look at the historical comparison of four factors.

The top half of the chart is all 66 teams remaining in the tournament (the data of teams in Wed play-in games are still included). There's nothing really that stands out from the annual decline in quality metrics. In the lower half of the chart, I took out the values of the 13-16 seeds to avoid distortions by lower-quality competition. The stats in green improved significantly, meaning the Top 12 averages are better than the Top 16 averages. For 2PD% and 3PD%, the increase is actually a bad thing because it means defense is worse. With historically weak 2PD%, a potential meta play is 2P% teams. Let's take a deeper look into this tournament's meta.

This chart shows how many of the Top 20/40/60/80/100 teams in each stat are present in the 2023 tourney, and the totals are cumulative (so 21-40 is actually 21-40 added to 1-20). Now, the real matter of importance is the red and green. Green represents historical high counts, and red represents historical low counts. 2023 posts four historical lows.
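The cumulative counting behind the chart can be sketched in a few lines, assuming you have each tournament team's national rank in a stat (1 = best). The ranks below are invented for illustration.

```python
THRESHOLDS = (20, 40, 60, 80, 100)


def cumulative_top_counts(field_ranks):
    """field_ranks: each tourney team's national rank in one stat (1 = best).

    Counts are cumulative, so the 40 bucket includes the top-20 teams too.
    """
    return {n: sum(1 for r in field_ranks if r <= n) for n in THRESHOLDS}


# Invented ranks for a handful of teams.
print(cumulative_top_counts([1, 15, 22, 59, 61, 100, 150]))
# {20: 2, 40: 3, 60: 4, 80: 5, 100: 6}
```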

- Even with weaker 2PD% (from the first chart), 2023 has 22 of the Top 60 2P% teams to go along with 19 of the Top 60 3P%. As I've said all year, shooting is down this year and even fewer of the elite shooting teams made the tournament.

- To make matters more confusing, 2P%D and 3P%D counts are close to historical lows. This leads me to think elite shooting teams are worthwhile picks. With fewer teams to match their elite shooting and fewer teams to "elitely defend" their shooting, elite shooting teams are a meta-play. These teams would include 2-seed ARI (9,14), 15-seed COLG (7,1), and 3-seed GONZ (2,12) with (2P% rank, 3P% rank) as my notations (and each of these have conflicts with other models). I'm less confident in ARI's shooting numbers because of certain Ls this season where they went cold. Also, ARI probably faces UTST (34,9) in the R32. Other (less elite) shooting teams include ORAL (9,34), MIA (21,41), XAV (37,3) and a long stretch in PNST (51,9).

- The other noteworthy meta-play concerns turnovers. 2023 has a record 20 of the Top 60 TORD teams, which means nearly a third of the field defends by taking the ball away from the opponent. Looking at the elite teams (Top 30), five are in the HOU regional, four in the KU regional, two in the PUR regional, and one in the ALA regional. There's no better way to stop a good shooting team than taking the ball away before they can shoot it. If you want to play against the meta, good shooting teams with elite ball security (in order to get off a shot) may be the way to go. Of the shooting teams listed, GONZ (10th), COLG (21st), PNST (5th), and ORAL (1st) rank well in TOR.

- For reference, last year, I called for 2P% and TORD to be the meta-plays, and predicted E8 runs for TEX and MIA. MIA worked, TEX not so much, but they would have made a better opponent for St Peter's than PUR did.

Seed-Group Loss Table

Since I didn't get to do extensive back-testing on this model and since there aren't any clear and obvious signals, I'm not going to post a full analysis with this model. Here are some brief takeaways though.

- The data does not include 2021 as many games were cancelled due to health concerns, and this has a significant impact on loss totals.

- 418 total losses among all the 1- thru 12- seeds. This is the 2nd highest total, the highest being 2018 with 419 and the third-highest being 2016 with 417. Not good company for 2023, and the fourth-highest is 2011 with 406. Two of those three years didn't have a 1-seed national champion.

- Speaking of 1-seeds, they recorded their second-highest loss total with 20 losses for the year, behind only 2016's 23. These 20 losses account for 4.78% of the 418, with only two years posting a higher percentage: 2003 and 2016. Both years only had one 1-seed in the F4.

- 3-seeds set a record year for losses with 33. However, those losses only accounted for 7.895% of the 418 total, which ranks fifth. This speaks to how weak the whole field is in 2023: The most losses ever by the 3-seed group but only ranks fifth in percentages-vs-field.

- 4-seeds tied two other years for most losses with 44. 6-seeds set a record with 47, and 8-seeds tied a record with 45. You would think 2023 would have shattered the all-time total with this many seed-groups hitting record highs, but the 5-seeds and 12-seeds tied record lows with 28 and 21 losses, respectively. These new highs and new lows are another reason why I don't trust the model just yet because I'm not sure it is reliable in normal years, let alone crazy ones like 2023.
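The percentage-of-field figures in the bullets above are a seed group's losses divided by the 418 total among 1- thru 12-seeds. A quick check using the counts quoted in those bullets:

```python
def loss_share_pct(group_losses: int, total_losses: int) -> float:
    """Share of all 1- thru 12-seed losses owned by one seed group, in percent."""
    return 100 * group_losses / total_losses


TOTAL_2023 = 418  # total losses among 1- thru 12-seeds, from the post

one_seeds = loss_share_pct(20, TOTAL_2023)
three_seeds = loss_share_pct(33, TOTAL_2023)
print(f"1-seeds: {one_seeds:.2f}%")    # 1-seeds: 4.78%
print(f"3-seeds: {three_seeds:.3f}%")  # 3-seeds: 7.895%
```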

This is all for tonight. I will try to get on in the morning and post my final predictions, but I still have a lot of work to do on my bracket and see if there are any worthwhile contests to enter.

Final Predictions

National Champ: HOU with ALA, UCLA and UK in F4 (somebody from the bottom-half of that region gets a cake-walk to the F4, probably PROV since I chose UK).

Model Picks:

- CHRL over SDST and AUB over IOWA, Poll-Based OS/US

- TCU to S16, Return & Improve Model

- UTST over MIZZ, Predictive OS/US

- R64 Ws for PNST and ORAL

- KU not to advance past S16 (actually have them losing to ARK).

- Round-by-round upsets: 5-4-3-0-0-0 (really hard finding upsets given the teams I advanced).

- I wouldn't be surprised if our first 14-seed made the S16, but I wasn't comfortable with any of them against 6-seeds. I also wouldn't be surprised if a 1-seed didn't win this year. But lack of quality on the top 3 seed lines suggests 1-seeds are still safest route.

- For the first five years, I went with curve-fitting strategies to pick my bracket. This year and last year, I went with a conference-based strategy. I haven't shared any details of this strategy, but the inspiration for it can be found in the Wikipedia pages of the tournament years (just in case you want to get a head start on me next year!!!). Anyways, I expect good things from the SEC. They have favorable paths (ARK, UK, ALA), they have several over-seeded opponents (one of which you already know I have knocked out in the first round), and they are due for a bounce-back year after sending six teams in 2022 (all 1- thru 6-seeds) with only five wins to show (three by ARK alone). That should explain my F4.

This was the first year where I thought my models didn't provide a lot of certainty/clarity for the tournament. It's why I spent a lot of time chasing down rabbit holes to no avail. It could just be the lack of quality in the tournament, and not lack of quality in the models. Only time can answer that question. Good luck, hope I could help this year (although I don't feel like I did), and as always, thanks for reading my work.