If you are a regular reader of the blog, the title speaks volumes, but more on that at the end of the article. Let's dive into the final article and pop out some grades.

OS/US Predictive

Model Grade: B Scientist Grade: C+

In my opinion, this model was a very good starting point for filling out a bracket. By "starting point," I mean it would have gotten you into the ballpark of the final outcome.

- ALA: Projections for E8, NC, E8, and S16, and actual result was S16.

- ARI: Projections for R32, R32, and E8, and actual result was R64.

- PUR: No projections due to a lack of historical equivalents. The last time that happened (2022), history was made (a 15-seed reached the E8), and the actual result was history being made again (16 def 1).

- MARQ: Only one projection for S16, and actual result was R32.

- HOU: Projections for E8 and NR, and actual result was S16.

- TEX: Projections for S16, S16, R32, and R32, and actual result was E8.

- KU: Projections for R32, R32, R32, and R32, and actual result was R32.

- UCLA: Projections for E8 and E8, and actual result was S16.

In 5 of 7 projections (ALA, ARI, MARQ, HOU, UCLA), the statistical mode was one round further than the actual result, which is why I used the phrase "in the ballpark." Of the other two, one was spot on (KU) and the other was an over-achievement (TEX). The PUR projections were indicative of a bad omen (another 16-seed over a 1-seed), but in all honesty, I wouldn't have predicted it, just as I wouldn't have predicted a 15-seed reaching the E8 in 2022 with no comparable history. I gave the scientist a lower grade than the model. I did use this model in combination with another to form a big-picture prediction, filling in the gaps with other models, but if I had paid attention to the statistical mode of each octet and built upon that starting point, my picks could have been much better than they were. This is something the bracket scientist should notice.
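The mode comparison above is easy to reproduce. Below is a minimal Python sketch, assuming each exit round maps to a win count (R64 = 0 up through NC = 6) and that ties between modes (HOU and TEX) resolve to the earlier, more conservative round. That tie-break rule and the names `projected_mode` and `mode_gaps` are my own illustration, not part of the model itself.

```python
from collections import Counter

# Exit rounds ordered by tournament wins: an R64 exit is 0 wins, a national title (NC) is 6.
ROUND_ORDER = ["R64", "R32", "S16", "E8", "F4", "NR", "NC"]
WINS = {rnd: wins for wins, rnd in enumerate(ROUND_ORDER)}

# 2023 octet projections and actual exits, taken from the list above (PUR had none).
PROJECTIONS = {
    "ALA": (["E8", "NC", "E8", "S16"], "S16"),
    "ARI": (["R32", "R32", "E8"], "R64"),
    "MARQ": (["S16"], "R32"),
    "HOU": (["E8", "NR"], "S16"),
    "TEX": (["S16", "S16", "R32", "R32"], "E8"),
    "KU": (["R32", "R32", "R32", "R32"], "R32"),
    "UCLA": (["E8", "E8"], "S16"),
}


def projected_mode(rounds):
    """Most frequent projected exit; ties go to the earliest round (assumed tie-break)."""
    counts = Counter(rounds)
    top = max(counts.values())
    tied = [rnd for rnd, n in counts.items() if n == top]
    return min(tied, key=WINS.get)


def mode_gaps(projections):
    """Rounds by which the mode overshot (+) or undershot (-) the actual exit."""
    return {
        team: WINS[projected_mode(rounds)] - WINS[actual]
        for team, (rounds, actual) in projections.items()
    }


for team, gap in mode_gaps(PROJECTIONS).items():
    if gap == 0:
        verdict = "spot on"
    elif gap > 0:
        verdict = f"mode {gap} round(s) too far"
    else:
        verdict = f"over-achieved by {-gap} round(s)"
    print(f"{team}: {verdict}")
```

Run as-is, it flags ALA, ARI, MARQ, HOU and UCLA as one round too far, KU as spot on, and TEX as an over-achiever, which matches the tally above.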

National Champion Profile

Model Grade: D Scientist Grade: C+

This model bombed for the 2023 tournament. The eventual NC was a Tier 3 choice, the two Tier 1 choices did not advance past the S16, and five of the six Tier 2 choices (MIA being the exception) combined for 4 total wins in the tournament. I had a feeling this model would break (for a lot of reasons), but I stuck with it for my national champion pick (nothing else). I gave the scientist a higher grade than the model because I didn't give this model any weighting in my bracket beyond the NC pick, and that pick alone still cost me four games. I also wrote this in the Taxonomical Approach to post-production article:

WFs have three of their four since 2016 (the beginning of the PPB era). In the same mind as Rule #4, newer identities and newer pairings are very good reasons to do this overhaul and stay up-to-date on the ever-evolving profile of National Championship contenders.

As much as I hinted at it, just because two WFs have never won a national title together doesn't mean it will never happen. New pairings have happened more frequently since 2010, so it was only a matter of time. I will talk more about 2023 CONN and the evolution of the game in a later section.

OS/US Poll-Based

Model Grade: C Scientist Grade: C

As always, this is my tie-breaker model (it's not one of the first ones I look at or use). If I don't have a lot of reliable model picks (which was the case in 2023) or have a lot of conflicting models (less the case in 2023), this model breaks those ties. This model only had two picks for 2023 and went 1-1, which I would consider B or B- range for a tie-breaker model. However, the one miss cost me an eventual F4 participant, so I had to tick it down a few grades, and I only felt it fair to give the scientist an equivalent grade, as if the scientist and the model walked off the cliff together (which sounds like the setup for a good joke).

OS/US Conference-Based

Model Grade: B+ (maybe even A-) Scientist Grade: B-

My best advice was "do not pick your bracket with this model, but some of the domino-less predictions seem safer". If you followed this, the only missed pick it produces is a TXAM win. While the scientist's advice was worthy of a grade in the A-range, I gave the scientist a lower grade because I did not follow the rules of this model in my personal bracket.

QC and SC Analysis

Model Grade: B+/B Scientist Grade: C

The real bright spots of the QC/SC Analysis were the comparisons to 2014. Most models were showing similarities to 2022 and 2018 (and rightfully so), but 2014 had more relatable elements to 2023: no 1-seed in the title game and an entire octet practically mapped by it (2014 WICH vs 2023 KU). The shortcomings of the model have to do with the predicted under-performance of 3-seeds and 7-seeds. Not only did 3-seeds out-perform their seed-group expectations (9 actual wins versus 8 expected wins), but they won more games than the 1-seed group (9 vs 5). The key takeaway: any tourney whose QC/SC analysis shows similarities to both 2014 and 2022 should annihilate 1-seeds early and often. 2014 lost a 1-seed in each of the R32, S16 and E8, while 2022 lost a 1-seed in the R32 and two in the S16 (similar to 2023).

Return-and-Improve Model

Model Grade: B Scientist Grade: B

Overall, this model did pretty well. I reduced the scientist grade from the A-range because I deeply regret not discussing group-based probabilities in the final write-up (I think I discussed this in one of the articles on the R&I Model). My concerns were mostly validated. TCU statistically could have been an R&I team, but I was worried about their chemistry and cohesion. My concerns about AUB and IOWA falling in seed line proved accurate, as neither hit the "improve" threshold. The 40% group was the biggest shocker. On average, teams in this R&I range improve about 38% of the time (approximately 3 out of 8 times), and coincidentally, this group had eight teams. The shock came when 5 out of 8 improved instead of 3 out of 8, especially considering one of these teams had to make a F4 run in order to achieve "improvement." For my personal bracket, I picked a lot of these games right, but I don't remember if I picked them specifically because of this model.
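To put a rough number on how big a shock 5 of 8 was, here is a short Python sketch of a group-based probability. It assumes, as a simplification of my own, that each of the eight teams independently improves with the same 37.5% (3-in-8) chance; real teams are not independent coin flips, so treat the result as a gauge rather than a model output. `prob_at_least` is a hypothetical helper name.

```python
from math import comb


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p): chance that k or more of n teams improve."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


BASE_RATE = 3 / 8  # roughly the 38% improvement rate for this R&I range
GROUP_SIZE = 8     # teams in the 2023 40% group

expected = GROUP_SIZE * BASE_RATE  # 3.0 improvers on average
tail = prob_at_least(5, GROUP_SIZE, BASE_RATE)
print(f"expected improvers: {expected:.1f}")
print(f"P(5 or more improve): {tail:.3f}")
```

Under those assumptions, five or more improvers shows up only about 14% of the time (roughly 1 in 7), which is why the result registered as a shock even though it was far from impossible.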

Meta Analysis

Model Grade: B- Scientist Grade: C+

The meta plays, as determined by the scientist, were elite 3P% and elite TOR, which called for GONZ as a S16 minimum, and COLG, PNST, and ORAL as R32 minimums. In my first scrubbing, I had 10-sd PNST advancing to the S16 and losing to 11-sd PITT, but I had to dial back PNST to bring my bracket more in line with the Aggregate Model projections. I ultimately went with PNST, ORAL and GONZ to the R32, giving more weight to data-driven models than to theoretical models like this one. ORAL was a total miss, and GONZ exceeded minimum expectations, even though I had them losing to TCU based on the R&I model.

The scientist got a lower grade for coming up short in two areas. First, the determination of meta plays was missing a few elements. Elite TOR was probably the best call, as five of the E8 teams were in the Top 70 in TOR (GONZ, TEX, CREI, FAU, and MIA). Elite 3P% was a decent call, as four of the E8 teams were in the Top 75 (GONZ, CREI, FAU, and MIA), but elite 3P%D was just as good, if not better, as three of the F4 teams were in the Top 70 (CONN, SDST, and FAU) along with an E8 team in KNST. Theoretically, if shooting is down across the entire field of 64 like it was in 2023, then elite 3P%D should slam the door shut on an opponent getting hot from downtown (the so-called great-equalizer effect, something I truly hate in the game of basketball). Another missing element is elite DRB, as five of the E8 teams had Top 77 rankings (CONN, GONZ, SDST, CREI, FAU). Again, theoretically, if shooting is down across the tournament field, then preventing easy points by rebounding the opponent's missed shots at a high rate should be another key factor in meta picks.

The second shortcoming for the scientist was terminology and thresholds. In the Final Analysis, I used the word "Elite" very loosely but settled on "Top 50 ranking" as the defining threshold (I've also used it heavily in this section to keep consistency between this article and the 2023 final article, but ultimately, the goal is to bury the word "Elite"). In past tournaments as well as 2023, Top 64, Top 75, and Top 80 have been key statistical thresholds for E8 teams. In stronger quality years, the thresholds should be higher. In weaker years like 2023, the thresholds can be lowered from Elite (Top 50) to tournament quality (Top 64 or Top 68, depending on how loosely you want to define it) or historical quality (Top 75 to Top 80). Even CONN was 88th in 3P%, barely missing the aforementioned cut-off for historical quality. Properly defining thresholds is definitely a lesson learned from the wildness of 2023.

Seed-Group Loss Table

Model Grade: N/A Scientist Grade: B-

Simply put, I should have followed the key takeaways from this model. Every model was suggesting weakness. This model in particular suggested no more than one 1-seed would make the F4, and based on historical matches with 2014 and 2016, a 66.7% chance that a 1-seed wouldn't be the national champ. I foolishly went with two (ALA and HOU). I could have significantly minimized the damage done to my bracket, but I couldn't figure out how to apply the matching model or the regression model to the rest of my models. Since this model is still in the works, I relegated it to the sidelines for predictive purposes (thus, no grade given).

Final Predictions

Model Grade: B to C+ Scientist Grade: C+ to C-

In all honesty, the models were very insightful this year, but not very helpful. Excluding the implosion of the Champ Model and the tie-breaker status of the poll-based OS/US model, the rest of the models held up pretty well, if only the scientist had used them more wisely. The 5-4-3-0-0-0 Agg Model was a bad call, as the final count was 4-3-3-1-0-0. Not only was it incorrect to predict five R64 upsets, but four of my five R64 upset picks came against 3- and 5-seeds, who went 8-0 in the 2023 R64. Imagine throwing out a NR, a F4 and an E8 team all in the R64 just to hit five upsets. This is why I've abandoned curve-fitting strategies: you are double-punished when you are wrong. As for the conference-based strategy, I simply chose the wrong conference to out-perform: I should have chosen the BEC instead of the SEC. If you are interested in the conference-based strategy, look at the record-by-conference section on every tournament year's Wikipedia page. It gives the round-by-round wins for each conference along with a winning percentage. (IMPORTANT NOTE: The Wikipedia pages account for wins in the play-in games, but in my rendition of the model, I do not count these wins in order to maintain statistical comparability with the pre-2011 years that did not have play-in games.) For the most part, the winning percentages of the power conference teams stay in the same range, so they provide a maximum/minimum quality check on your bracket. Likewise, the number of teams from a conference is usually an indicator of performance: conferences with 3 or fewer bids and 7 or more bids usually under-perform (bottom three in win%), whereas those with 4 to 6 bids usually out-perform (above 60% win%). The most important detail of a strategy is that it isn't meant to be predictive; it is meant to be a guideline (a way to examine/control going too far or not far enough with certain teams to which you may have a bias).
As a hypothetical example, after you produce your first bracket for scrubbing, you realize that Power Conference X has five bids and you only have one win for all five teams (a 1-5 record, or a Win% of about 17%). Very rarely does a power conference collectively win less than 40% of its games (only 7 times out of 90 attempts since the 2008 tournament). Of these seven times, only one (2013 B12) has been a 5-bid power conference (two were 3-bid, two were 7-bid, one was 1-bid, and one, in 2023, was 4-bid). These numbers demonstrate the higher likelihood of under-performance for conferences with 3 or fewer bids and 7 or more bids (5 of the 7 under-performances).
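The quality check in the hypothetical above can be sketched in a few lines of Python. The 40% floor, the 60% mark and the 4-to-6-bid sweet spot come from the paragraphs above; the class and function names are hypothetical, and win% is computed as wins over total games with play-in games left out, as in my rendition of the model. Conference X's five teams each lose once, so one win means a 1-5 record.

```python
from dataclasses import dataclass

WIN_PCT_FLOOR = 0.40  # power conferences rarely win less than 40% of their games
SWEET_SPOT_PCT = 0.60  # 4-to-6-bid conferences usually finish above this


@dataclass
class ConferenceLine:
    """One conference's tournament record in a draft bracket (play-in games excluded)."""
    name: str
    bids: int
    wins: int
    losses: int

    @property
    def win_pct(self) -> float:
        games = self.wins + self.losses
        return self.wins / games if games else 0.0


def quality_check(conf: ConferenceLine) -> list[str]:
    """Return warnings for a draft bracket; a guideline, not a prediction."""
    warnings = []
    if conf.win_pct < WIN_PCT_FLOOR:
        warnings.append(f"{conf.name}: {conf.win_pct:.1%} is below the {WIN_PCT_FLOOR:.0%} floor")
    if 4 <= conf.bids <= 6 and conf.win_pct < SWEET_SPOT_PCT:
        warnings.append(f"{conf.name}: {conf.bids}-bid leagues usually out-perform ({SWEET_SPOT_PCT:.0%}+)")
    if conf.bids <= 3 or conf.bids >= 7:
        warnings.append(f"{conf.name}: {conf.bids} bids, historically an under-performance risk")
    return warnings


# Hypothetical Power Conference X: five bids, one win, so a 1-5 record.
for warning in quality_check(ConferenceLine("Conference X", bids=5, wins=1, losses=5)):
    print(warning)
```

Conference X trips both the 40% floor and the 4-to-6-bid expectation, which is exactly the kind of flag that should send you back to re-examine those five teams before finalizing.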

The Curious Case of Crashing Connecticut

As I foreshadowed earlier in the article, I want to talk a little bit about the National Champ. First, there was not a clear favorite in 2023. CONN was a Tier 3 contender according to the Champ model because they did not win a conference title, they did not have a coach who had advanced to the E8 in a previous tourney, and they had a taxonomy mismatch plus deviations in shot distribution and post-production from previous champs. However, I did write an in-season micro-analysis article about them because I thought they were the only title contender midway through the season. If you saw the way they walked through the Phil Knight Invitational, especially the utter dismantling of 1-sd ALA, you would have thought the same thing. When they hit conference play, they hit a wall of resistance, part of which was self-inflicted and part of which was opponent personnel. In that article, I did an excellent job of analyzing the first part: the sudden onset of the turnover bug during that losing stretch was a key factor. While I noted the fall in shooting percentages, I never looked for an explanatory factor: opponent personnel. CONN ran 20% of their shots through SG Jordan Hawkins, which is a good idea if you have a player like him who eventually becomes a lottery pick. However, the BEC was loaded with players who could match up with Hawkins:

- XAV Colby Jones was 34th overall in 2023 NBA draft and XAV swept CONN.

- MARQ Jones and Joplin helped MARQ win 2 of 3 matchups vs CONN (they are 6'5" and 6'7"), but I can't remember which one was the primary assignment on Hawkins.

- CREI Trey Alexander helped CREI split the regular season with CONN; he is built similarly to Colby Jones and will likely get drafted in the same range as Jones.

- PROV Devin Carter is a quick guard able to navigate screens to maintain contact with Hawkins.

- JOHN AJ Storr is a 6'6", 200-lb guard with the height and length to frustrate Hawkins.

- HALL Richmond, Odukale and Ndefo were rotated in guarding Hawkins to keep fresh legs on him, and they could switch interchangeably onto Hawkins when their assignment screened for him.

- Even GTWN and NOVA had matchable players to guard Hawkins, although these teams weren't quite good enough to match CONN across the board or get a win against them.

Match-ups matter! In CONN's path to the title, guards like these did not exist. The best possible match-up was ARK Ricky Council, who had the size (6'6") and athleticism to stay with Hawkins plus four days to prepare for the match-up (although I did not watch much of the 2023 tournament for personal reasons). It's no surprise that Hawkins's worst performance in the tourney was against ARK's length and athleticism. No one else (GONZ Julian Strawther, STMY Logan Johnson, MIA Miller or Poplar, or SDST Bradley) had the measurables or the individual defensive skills to contain Hawkins. If I could have perfectly foreseen these five opponents (especially MIA and SDST in the F4 and NC games), I could have easily predicted that Hawkins would go 21 of 42 from 3-pt land in the tourney. In fairness, I did have CONN in the E8 through STMY and ARK because I thought they were a lock to be National Champions in 2024 if everyone stayed and they made an E8 run in 2023.

PUSH & PULL

Now we come to the difficult part of the article. If you are a regular reader of the blog and you have keen attention to detail like myself, the title of the article implies a significant change for PPB. Past season-opening articles were titled "Welcome to the {insert current season}," whereas this one says "Review of the...." Yes, you guessed it: there will not be a 2023-24 season of PPB (for subsequent years, I don't know yet). This decision was actually simple to make, and the reasons are twofold: a push factor and a pull factor.

The push factor is none other than the current state of the game. College basketball has been changing rapidly over the last ten years, probably more than in any other ten-year period, so much so that the product on the court is almost unrecognizable from the product on the court when I started in 1998. I absolutely despise the way the game is played today (especially at the NBA level), and I despise it so much that I even gave it a name: Trashketball. It is better known as ball-screen offense: one man dribbles, one man ball-screens, and three men stand outside the 3-pt perimeter and do absolutely nothing. The NBA affectionately calls it "creating spacing," but when you stand in one spot and only move if the ball is passed to you by the one man dribbling, then you are affectionately "doing nothing." When you've seen basketball played through Dean Smith's motion offense, Pete Newell's reverse-action offense, John Wooden's high-post offense, Pete Carril's Princeton offense, Bob Spear's shuffle offense, Rene Herrerias's flex offense, and the variety of off-ball screens and cuts that these systems have introduced to the game, then today's game looks, feels and plays far inferior to these. Even NBA practitioners prove my point when they argue the superiority of ball-screen offense: "Ball-screens are the highest form of basketball because they force the defense, particularly the ball-defender and the screener-defender, to make a choice." If you accept this argument as fact (and yes, there is some truth to it), then how much more dangerous will your offense be if the remaining three offensive players run one off-ball screen (3rd and 4th players) and one off-ball cut (5th player)?

- With trashketball, off-ball defenders have a two-option choice: 1) Stay attached to the shooter on the perimeter or 2) help protect the paint against dribble penetration from the on-ball action.

- With off-ball action supplementing on-ball action, off-ball defenders now have to make a choice with more than two options, and these choices have to be in coordination with all other defenders. By the NBA logic that claims forcing the defense to make a choice results in a superior form of basketball, then each off-ball defensive player being forced to make a multiple-option choice which also must be in coordination with the other choices of the other four defending players should produce a form of basketball superior to the binary choice of plain-vanilla ball-screen offense!!!

- By also running off-ball action instead of just on-ball action, you are running an offense that looks more like a motion offense or a reverse-action offense than trashketball. So yes, the illogical foundations of the current basketball ideology are taking basketball backwards, not forwards.

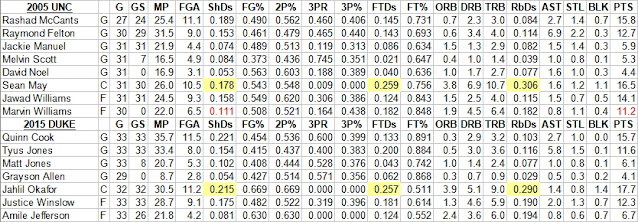

- Not that one example fully proves my thesis, but it is rather proof-positive when considering the fates of 2023 CONN and 2023 UNC. 2023 CONN had an offense that mixed set plays (usually Hawkins running through multiple off-ball screens to get an open 3pt shot), ball-screen continuities (slightly more advanced ball-screen offense than simple trashketball), and dummy sets (alignments and actions that appear to be one of their set plays/continuities in order to bait the defense into over-defending something that isn't there or isn't going to happen). 2023 UNC ran trashketball: the ball-screen offense initiated through RJ Davis or Caleb Love with Armando Bacot ball-screening and the remaining three players "doing nothing." CONN won the tournament while UNC was left out of it. Yes, I am fully aware that the 2022 UNC team also ran trashketball and made a run to the title game. When you compare the game logs of the two UNC teams (22 vs 23), you will see that 2022 UNC warmed up from 3pt land and 2023 UNC did not (35.4% vs 30.4% on approximately 24.6 3PAtt). This is the great-equalizer effect that I mentioned earlier: made 3s are a good way to cover up the blemishes of playing low-quality basketball.

Trashketball is inferior to other forms of basketball for another big reason that I have yet to mention: it requires a specific set of rules in order to be feasible. Horrendous rule sets like the "freedom of movement" philosophy reward dribble-based offenses (like trashketball) with free throws by calling fouls on defenders for contact that was initiated by the offensive player, yet passing-based offenses are only rewarded with the fruits of their labor because they don't seek out contact. The rules dictate that if you play 'this way', you are more likely to get free throws, but if you play 'that way', you are less likely to get them. That is not how fouls should be determined, but it is a big reason why teams are shifting away from older, more skill-oriented offenses to low-quality, low-skill, low-output offenses like trashketball. For all of the college basketball geniuses who can't figure out why scoring is down year after year: it is down because low-quality basketball is being incentivized and rewarded. Trashketball also needs an extended three-point arc to "create spacing." If the arc is 19'9" from the basket, off-ball defenders only have to go as far as 19'8" from the rim in order to protect the basket (and really, they don't even have to go out that far until their assignment gets the ball passed to him). When the arc is extended, defenders have to stay further out if they want to disrupt stationary shooters. Whether it be the motion, the reverse-action, the Princeton, or the high-post offense, none of them needed this artificially created spacing to win NCAA championships. They created the spacing themselves, and highly skilled players executed them to produce high-probability scoring opportunities. After all, scoring was high when these systems were in play, and it has been falling since the game shifted away from these higher-quality styles to the current lower-quality style of trashketball.
When rule sets have to be designed and implemented in order to make this style functional, then the current style is clearly inferior to the styles that didn't need these rule sets and also scored better without them! Yes, this long-winded rant explains why I've felt like college hoops has been pushing me toward other avenues of interest, but this push factor has been around for a while; it wasn't until the arrival of the pull factor that I made this decision.

The pull factor that is pulling me away from the blog is real-life priorities. 2023 was not a good year for me in real life (or with my bracket, to say the least). If this tells you anything about life, I'd rather have 2020 back than have to go through 2023. On the Friday before Selection Sunday, a close family member passed away, and the funeral was on the day of Thursday's opening games. Then a week later, an immediate family member was diagnosed with cancer and has been on chemo since April. These two events were the reasons I didn't watch much of the 2023 tourney. Then in early October, another immediate family member was diagnosed with cancer, and they started chemo last week. As a result, my freelance work as a college basketball writer/analyst/philosopher has to be replaced with caretaking duties. If not for the pull factor, I probably would have continued for another year.

FINAL PREDICTIONS

Well, if you've made it this far in the article, I do have something left for you: My premonitions of the 2023-24 season.

- The Return & Improve Model may be a very good tool for next year. Going by the preseason top-25, DUKE, PUR, MIST, MARQ, and TXAM return close to 80% of their minutes from last year (which probably means they will return 70-80% of their production). This means DUKE should be good for 2 wins, PUR should be good for 1 win, MIST should be good for 3 wins, MARQ should be good for 2 wins, and TXAM should be good for 1 win, as long as they don't suffer injuries, drop in seed line from last year, and don't run into critical match-ups in the bracket.

- FAU returns 90% of their F4 team from last year. I am very skeptical of smaller-conference teams when it comes to Return & Improve, which is why I don't include their results in the model. They move from CUSA to the AAC, where MEM and UNT look like their only real challengers. This year, they aren't a surprise team; they will be the hunted instead of the hunters, and I'm not sure how much more potential they have to unlock as players and as a team (especially compared to power conference teams). If you think Dusty May is the second coming of Brad Stevens (2010 and 2011 BUT), then pencil them into the F4, but I would watch them closely all season before doing so.

- If you dig deep in my blog, you'll find I've said Tom Izzo is one of the best tournament coaches, if not the best, because every four-year player who has played for him has reached a F4. I'm not sure this achievement still holds true given the fifth-year Covid rule and the transfer portal, but we are due for another Izzo F4 run (the last was 2019), and his team was already mentioned in the first point. I'm proclaiming before the season starts that MIST goes to the 2024 F4.

- CREI has a good chance to be the best shooting team in the NCAA next year, with high-percentage shooters at every position 1-4 and from the bench. They return approximately 60% of their minutes and reached the E8 last year, which removes a disqualifier from their NC profile for this year. Their defense is my concern, especially at the point of attack since Nemhard moved to GONZ and will likely be replaced by UTST's Ashworth. If the NBA pays up for elite shooting percentages, then it's a good enough reason to pay attention to shooting numbers (as I've done for years).

- If there was one model that I would consider changing or implementing better, it would be the National Champ model. The simple rule, as I've jokingly stated in a few National Champ Profile articles, is to take a Tier 1 contender or take CONN. In years when the model has worked, a Tier 1 contender has won it all, but in years (2011, 2014, and 2023) when the model has 'broken', CONN has won it all. This simple rule isn't scientific at all, but I still like the principles on which the model is based. Instead, I would focus on cleaning out non-contenders (Tier 4 or lower) and let some of the other models have a say in the process as well.

- Just a friendly reminder, defending national champs do not advance past the S16 unless they return close to 90% of their roster. CONN is returning just 40% of their minutes and production from their 2023 title team. You know the drill.

- Finally, I wouldn't post a long-winded rant about trashketball without leaving some advice on how to pick a bracket around it. Again, match-ups matter! Even though I suggest these teams may employ a specific style, the coach may think otherwise. The key is personnel, and if personnel can fit a lot of different builds, then they can match up with a lot of different teams.

- Tower defense: Teams with a tall big man whom they don't want navigating screens on the perimeter will leave him in the paint and force the guard to chase the ball-handler over the top of the screen in order to semi-contest any 3-pt attempt by the ball-handler. 2021-22 ARI employed this style with Christian Koloko. Top 25 teams that could fit this build include KU (Dickinson), PUR (Edey), CONN (Clingan), CREI (Kalkbrenner), GONZ??? (Gregg), ARI (Ballo), UK (Bradshaw), TEX (Shedrick), USC (Morgan/Iwuchukwu), STMY (Saxon), and ILL (Dainja). These teams would be susceptible to play-making PGs, much like 2022 HOU's Shead did to ARI in the S16 with 21 PTS, 6 AST, because the roll-game in pick-n-roll becomes more of a threat with the guard chasing over the top. (I marked GONZ with question marks because I don't know who their 5th starter will be: if it is Gregg at the 5-spot, then the tower defense may be the best option.)

- Hedge and Recover: Teams with athletic big men can have them show a presence on the other side of the screen and then recover to their own assignment (the screener). The hedge should deter any vision or dribble penetration while allowing time for the guard to re-establish defensive position. 2023 SDST employed the hedge-and-recover as their primary ball-screen defense en route to a NR run. Top 25 teams that could fit this build include MIST (Sissoko), ARK (Mitchell), SDST (Ledee), UNC (Bacot), BAY ("Everyday John"), and ALA (Pringle). These teams would be susceptible to ball-handlers who can exploit the spatial gap between the hedger and the recovering guard either with a quick-release, off-the-dribble shot (CONN Hawkins) or a pass between the two defenders to a cutter in the high post area (Jackson Jr.).

- Ice Defense: Teams with length in the off-ball positions will want to freeze the ball on one side of the court and use the off-screen defenders as help-side defense. They "ice the screen" by having the ball-defender aggress over-the-top of the screen before the screen arrives and use the screener's defender to deny a dribble-drive rim attack. 2018 LOYC used this defense in their infamous F4 run. Top 25 teams that could fit this build include DUKE, TENN, HOU, and TXAM. These teams would be susceptible to ball-handlers that can shoot off-the-dribble (MICH vs LOYC in 2018 F4, BAY vs HOU in 2021 F4, NOVA vs HOU in 2022 E8) or back-screens against help-defenders with a cross-court baseline-to-opposite-wing pass.

- Trap Defense: Teams with length/reach in the guard spots and athletic big men can trap the ball screen, where both the ball-defender and the screener-defender chase the ball-handler in double-team fashion. Their length can deny vision, and the trap can intimidate the ball-handler into picking up their dribble, further aiding the defense. 2011 VCU employed this trapping strategy (called "Havoc") during their F4 run. Top 25 teams that could fit this build are MARQ and MIA. These teams would be susceptible to taller ball-handlers with passing ability who can see over/around the trap as well as mobile big men who can flare away from the trap to provide a passing outlet and a 4-vs-3 counter-attack to the trap. Rubs are another good method to counter the trapping defense, where the ball-handler passes to the screener before the screen is set and then rubs his defender off of the screener in order to receive the ball again.

- Switching Defense: Teams with interchangeability in players can simply switch assignments to defend the ball-screen so that the screener-defender now becomes the ball-defender and the ball-defender becomes the screener-defender. It allows the defense to keep pressure on the ball to deter outside shots as well as lock the screener to the perimeter to prevent the roll-game. 2023 FAU rode this style of defense to the F4. Top 25 teams that could fit this build are FAU and NOVA. These teams would be susceptible to back-to-the-basket post-players if a smaller guard (PG or SG) were to switch onto the post-player. In the NBA, they punish switching teams by setting a screen with a slower screener-defender and then attack the switch off-the-dribble which essentially becomes a foot-race to the rim. Even if a team isn't fully switchable for all 5 positions, they can still employ this defensive strategy on the spots which are interchangeable, but it does require experience and communication.

If there was one piece of advice I could give that would outweigh everything else, it is this: re-read the blog and take notes. I've made and documented a lot of correctable mistakes in seven years of PPB, and there's no reason for you to repeat them. Though I've been wrong a lot, I've been right a lot more, so there should be plenty of wisdom in these countless paragraphs. Likewise, if you want to try to emulate my work, I've left plenty of details on how to do it. Though I've yet to pick a perfect bracket, I hope my work plays some role for whoever eventually does. But for now, life calls upon my services, and like the tournament, we'll just have to wait and see how it plays out. As always, I greatly appreciate the time you take out of your life to read my blog. Farewell!

Follow-up to Comments

I was looking up some information on a few models and noticed the comments. First off, thanks for all of the well wishes. However, I do believe I've written my last article for PPB. My dad passed away five days before Christmas, and Pete Tiernan moved on from college basketball analysis years ago. For me, it just doesn't bring the same enjoyment without my two biggest influences still around. Since this reply is too long to post in the comments section, I've added it to the bottom of the article.

As for the questions, I'm still pretty confident CONN won't make it past the S16. Teams with athletic middle-men (SG/SF/PF/CF) give them fits (HALL & KU), and their defense is atrocious, especially compared to their 2022 counterpart. However, they are good enough to win two games in the first weekend, so I would hesitate to bet against them vs. an 8/9 the way I did against KU last year, unless that 8/9 matches the description above. No national champion in the Kenpom era has advanced past the S16 in the following tournament except 2007 FLA, which returned >95% of its 2006 production. The last reigning NC to even make the S16 was 2016 DUKE, so it seems we are overdue.

MIST reminds me of 2023 UNC in many ways, so I am far less confident in that pick. While MIST is likely to make the tourney, its qualitative and statistical similarities to 2023 UNC have me doubting a 4-game winning streak in March. Tyson Walker needs to get healthy and play better than his true form (if that's possible). Favorable pathing would also be a welcome blessing. Based on the Top 16 reveal, the East Region with UNC & IAST would be a good landing spot for MIST; in the West, KU would also be a favorable match-up, but DUKE not so much. I would also assume MARQ and BAY would be good match-ups based on recent games. It just depends on how all these teams get seeded. There was nothing scientific about the prediction; it was just one of the many qualitative guarantees that haven't held up in the last few years (1-seeds perfect against 16-seeds, Roy Williams always winning a R64 game, a National Champion not having transfers in the starting lineup, etc.).

As for the models, you are welcome to the data I can legally give out (i.e., not subscription-based data like Kenpom/Torvik/Sagarin, which I don't think I can share). Keeping them up to date will feel like a full-time job, though I enjoyed doing it, so it never felt like work to me. Knowing how to apply them, knowing when they work and when they don't, and getting them ready before Bracket Crunch Week has been a trial by fire over the last seven years.

As for my thoughts on potential champs, I wouldn't look anywhere other than PUR, TENN, or HOU. I don't trust PUR's backcourt, but statistically (except for the negative TO rate) they look like the favorite. I don't trust TENN's shooting numbers, but if Vescovi, Zeigler, and Mashack can find the mark from the perimeter, they would be my favorite. I don't trust HOU's reputation or analytics. They've run up the score on bad teams (30+ margin of victory against 9 clearly-not-in-tourney teams), and I believe this has inflated their metrics. As fundamentally sound and dedicated as HOU is, their shooting numbers are as atrocious as CONN's defense, and HOU still loses to the same recipe: Spread 'em Out, Shoot 'em Up and Send 'em Home (ALA and MIA from previous years). This year, they are 1-3 against defensive PGs and switching defenses (KU's Dajuan Harris, 1-1 vs IAST's Lipsey, and TCU's switching defense), with 1 game vs KU remaining at home that HOU will likely win with KU's McCullar unlikely to play. That record makes sense given how much of their offensive system runs through Shead, so look out for that element in their match-ups (like 2022 NOVA). If this year matches 2013, HOU best resembles 2013 champion LOU in many respects. However, 2013 was the last year without freedom of movement, so in my opinion, a team/style that won without freedom of movement is far less likely to win in the current freedom-of-movement era.

As for my other thoughts on the season, 2023 was historically bad for ratings. Even though the F4 teams came from major viewing markets (NE U.S., FLA, and Cali), none of them was one of the top 2 programs in those regions (SYR/JOHNS, FSU/FLA, and USC/UCLA). I expect a lot more blue bloods in this year's E8 and F4 for ratings' sake, and I wouldn't be surprised if the NCAA sent out a memo to officials to ensure more blue bloods in the F4.

I doubt I could predict each team's seed, but based on stats/metrics and the games I've seen, my list of R64 upset victims (1-6 seeds) would include ARI, IAST, BAY, OKLA, AUB, SCAR, UNC, and WISC. This doesn't mean all of them will lose in the R64 even if they get a top-6 seed, since Cinderella-like opposition also matters. Likewise, from the few models I've "short-cutted" so far, I would expect more upsets in the later rounds (R32 and S16) than in the R64, similar to 2023 (4-3-3-1-0-0), so probably no more than 3 or 4 of these 8 teams will fall in the R64.

As for the one thing I haven't studied (and want to study but probably still won't), 3P% and 3PR seem to be important indicators of tourney advancement potential, with 3P% being slightly more important than 3PR. My hypothesis would be that teams with high 3PR and low 3P% could be potential upset victims (aka poor shooting teams or poor shot selection), and teams with high 3P% and balanced 3PR (not top 30 but not bottom 250 either) could be potential deep runs (+PASE). If I had to postulate an ideal metric, it would be one that best predicts the NBA Finals as if they were a single-game elimination tournament (the NCAA structure). In theory, if the college game becomes more like the NBA style of play, the only difference is the tournament structure. On any given night, a bottom-standing NBA team can knock off a top-standing NBA team, but in a best-of-7 format, the better team will usually get to four wins before the worse team does. I believe the 3-point shot will explain a lot of this variability in outcomes.
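To put a rough number on the single-game vs. best-of-7 point, here's a small sketch of how often a better team wins one game compared to a first-to-4 series. It's an illustration only: it assumes every game is independent and the better team wins each one with the same probability `p`. The 60% figure below is hypothetical, not something pulled from my models, and `series_win_prob` is a made-up name for this example.

```python
from math import comb


def series_win_prob(p: float, games_to_win: int = 4) -> float:
    """Chance that a team wins a first-to-`games_to_win` series.

    Hypothetical simplification: every game is independent and the team
    wins each one with the same probability p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    q = 1.0 - p
    n = games_to_win
    # The series ends on the team's n-th win after k losses (k = 0..n-1);
    # comb(n - 1 + k, k) counts where those k losses fall among the
    # first n - 1 + k games.
    return sum(comb(n - 1 + k, k) * p**n * q**k for k in range(n))


if __name__ == "__main__":
    p = 0.6  # hypothetical per-game edge for the better team
    print(f"single game: {series_win_prob(p, 1):.3f}")
    print(f"best-of-7:   {series_win_prob(p, 4):.3f}")
```

With that hypothetical 60% per-game edge, the better team wins a single game 60% of the time but a best-of-7 series about 71% of the time (0.710208). That gap is the structural difference between the NCAA's one-and-done format and the NBA Finals; real games aren't independent coin flips, so treat it as a ballpark only.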

I've always wanted my work to play a role in predicting a perfect bracket, but with everything that's happened IRL, I don't think it would matter as much to me as it used to. I can't promise that I'll be back on here (unless I need something else from one of the articles), but in case I don't come back, good luck to you both, Toz and Boston.